General solution of an inhomogeneous system. Homogeneous systems of linear equations. Solution of homogeneous systems

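A linear equation is called homogeneous if its free term is equal to zero, and inhomogeneous otherwise. A system consisting of homogeneous equations is called homogeneous and has the general form:

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = 0,\\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = 0,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = 0. \end{cases}$$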

Every homogeneous system is obviously consistent, since it has the zero (trivial) solution. Therefore, for homogeneous systems of linear equations the main question is whether nonzero solutions exist. The answer is given by the following theorem.

Theorem. A homogeneous system of linear equations has a nonzero solution if and only if its rank $r$ is less than the number of unknowns $n$.

Proof. Suppose that a system of rank $r$ has a nonzero solution. Obviously, $r$ does not exceed $n$. If $r = n$, the system has a unique solution; since a homogeneous system always has the zero solution, this unique solution is the zero solution. Thus, nonzero solutions are possible only for $r < n$. Conversely, if $r < n$, the system has $n - r$ free unknowns; assigning them arbitrary values, not all zero, and expressing the basic unknowns through them gives a nonzero solution.

Corollary 1. A homogeneous system of equations in which the number of equations is less than the number of unknowns always has a nonzero solution.

Proof. If the system has $m < n$, then its rank does not exceed the number of equations, i.e. $r \le m < n$. Thus, the condition $r < n$ is satisfied and, therefore, the system has a nonzero solution.

Corollary 2. A homogeneous system of $n$ equations in $n$ unknowns has a nonzero solution if and only if its determinant is zero.

Proof. Suppose that a homogeneous system of $n$ linear equations with matrix $A$ and determinant $\Delta = \det A$ has a nonzero solution. Then, by the proven theorem, $r < n$, which means that the matrix $A$ is singular, i.e. $\Delta = 0$. Conversely, if $\Delta = 0$, then $r < n$, and by the theorem the system has a nonzero solution.

Kronecker-Capelli theorem: an SLAE is consistent if and only if the rank of the system matrix is equal to the rank of the extended matrix of this system. A system of equations is called consistent if it has at least one solution.

Homogeneous system of linear algebraic equations.

A system of m linear equations in n variables is called a system of linear homogeneous equations if all its free terms are equal to 0. Such a system is always consistent, because it always has at least the zero solution. A system of linear homogeneous equations has a nonzero solution if and only if the rank of its coefficient matrix is less than the number of variables, i.e. $\operatorname{rank} A < n$. Any linear combination of solutions of a linear homogeneous system is also a solution of this system.

A system of linearly independent solutions $e_1, e_2, \dots, e_k$ is called fundamental if every solution of the system is a linear combination of these solutions. Theorem: if the rank $r$ of the coefficient matrix of a system of linear homogeneous equations is less than the number of variables $n$, then every fundamental system of solutions of the system consists of $n - r$ solutions. Therefore, the general solution of a linear homogeneous system has the form $c_1e_1 + c_2e_2 + \dots + c_ke_k$, where $e_1, e_2, \dots, e_k$ is any fundamental system of solutions, $c_1, c_2, \dots, c_k$ are arbitrary numbers, and $k = n - r$. The general solution of a consistent system of m linear equations in n variables is equal to the sum of the general solution of the corresponding homogeneous system of linear equations and an arbitrary particular solution of this system.
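A minimal numeric check of this structure, assuming a small hypothetical system chosen only for illustration (it is not taken from the text): rank $r = 1$ and $n = 3$, so the fundamental system has $k = n - r = 2$ columns. The names `Y0`, `E1`, `E2` and `general_solution` are illustrative.

```python
def mat_vec(A, x):
    """Multiply a matrix A (list of rows) by a column vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Hypothetical system A x = B with rank r = 1 and n = 3 unknowns.
A = [[1, 1, 1],
     [2, 2, 2]]
B = [3, 6]

Y0 = [1, 1, 1]    # a particular solution: A*Y0 = B
E1 = [-1, 1, 0]   # fundamental system of the homogeneous system A x = 0
E2 = [-1, 0, 1]   # (k = n - r = 2 columns)

def general_solution(c1, c2):
    """Y = Y0 + c1*E1 + c2*E2 for arbitrary constants c1, c2."""
    return [y + c1 * e1 + c2 * e2 for y, e1, e2 in zip(Y0, E1, E2)]

# Every choice of the constants gives a solution of the inhomogeneous system.
for c1 in range(-2, 3):
    for c2 in range(-2, 3):
        assert mat_vec(A, general_solution(c1, c2)) == B
```

For instance, the solution $Y = (3, 0, 0)$ differs from $Y_0$ by $Y - Y_0 = (2, -1, -1) = -E_1 - E_2$, which is a solution of the homogeneous system, as the statement predicts.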

7. Linear spaces. Subspaces. Basis, dimension. Linear span. A linear space $V$ is called n-dimensional if it contains a system of $n$ linearly independent vectors and any system of a larger number of vectors is linearly dependent. The number $n$ is called the dimension of the linear space and is denoted by $\dim V$. In other words, the dimension of a space is the maximum number of linearly independent vectors in it. If such a number exists, the space is called finite-dimensional. If for any natural number $n$ the space contains a system of $n$ linearly independent vectors, the space is called infinite-dimensional (written $\dim V = \infty$). In what follows, unless otherwise stated, only finite-dimensional spaces are considered.

A basis of an n-dimensional linear space is an ordered collection of $n$ linearly independent vectors (basis vectors).

Theorem 8.1 (expansion of a vector in a basis). If $e_1, e_2, \dots, e_n$ is a basis of an n-dimensional linear space $V$, then any vector $v \in V$ can be represented as a linear combination of the basis vectors:

$$v = v_1e_1 + v_2e_2 + \dots + v_ne_n,$$

and, moreover, in a unique way, i.e. the coefficients $v_1, v_2, \dots, v_n$ are determined uniquely. In other words, any vector of the space can be expanded in the basis, and the expansion is unique.

Indeed, the dimension of the space is $n$. The system of vectors $e_1, \dots, e_n$ is linearly independent (it is a basis). Adding any vector $v$ to the basis gives a linearly dependent system, since it consists of $n + 1$ vectors of an n-dimensional space. Using property 7 of linearly dependent and linearly independent vectors, we obtain the conclusion of the theorem.
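In practice the coordinates $v_1, \dots, v_n$ are found by solving the linear system whose columns are the basis vectors. The following is a sketch, assuming exact rational arithmetic via Python's `fractions` module; the function name `coordinates` is illustrative.

```python
from fractions import Fraction

def coordinates(basis, v):
    """Return the unique coefficients c with v = c[0]*basis[0] + ... + c[n-1]*basis[n-1].

    The basis vectors become the columns of an n x n system, which is solved
    by Gauss-Jordan elimination over exact fractions.
    """
    n = len(basis)
    # Augmented matrix [e_1 ... e_n | v]; row i holds the i-th components.
    M = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])] for i in range(n)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("the given vectors are not a basis")
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [x / p for x in M[col]]           # make the pivot equal to 1
        for r in range(n):                          # zero the rest of the column
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [M[i][n] for i in range(n)]
```

For example, with $e_1 = (1, 0, 0)$, $e_2 = (1, 1, 0)$, $e_3 = (1, 1, 1)$ and $v = (3, 2, 1)$, the call `coordinates([(1, 0, 0), (1, 1, 0), (1, 1, 1)], (3, 2, 1))` gives the coordinates $1, 1, 1$, i.e. $v = e_1 + e_2 + e_3$.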

Kaluga branch of the federal state budgetary educational institution of higher professional education

"Moscow State Technical University named after N.E. Bauman"

(Kaluga Branch of Moscow State Technical University named after N.E. Bauman)

Vlaykov N.D.

Solution of homogeneous SLAEs

Guidelines for conducting exercises

on the course of analytical geometry

Kaluga 2011

Lesson objectives

Lesson plan

Necessary theoretical information

Practical part

Monitoring the mastery of the material covered

Homework

Number of hours: 2

Lesson objectives:

Systematize the acquired theoretical knowledge about the types of SLAEs and methods for solving them.

Gain skills in solving homogeneous SLAEs.

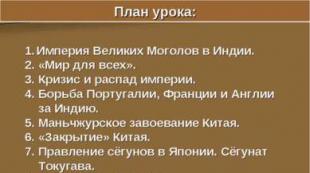

Lesson plan:

Briefly outline the theoretical material.

Solve a homogeneous SLAE.

Find the fundamental system of solutions of a homogeneous SLAE.

Find a particular solution of a homogeneous SLAE.

Formulate an algorithm for solving a homogeneous SLAE.

Check your current homework.

Carry out verification work.

Present the topic of the next seminar.

Submit current homework.

Necessary theoretical information.

Matrix rank.

Def. The rank of a matrix is the maximum order of its non-zero minors. The rank of a matrix $A$ is denoted by $\operatorname{rank} A$.

If a square matrix is non-singular, then its rank is equal to its order. If a square matrix is singular, then its rank is less than its order.

The rank of a diagonal matrix is equal to the number of its non-zero diagonal elements.

Theor. When a matrix is transposed, its rank does not change, i.e. $\operatorname{rank} A^T = \operatorname{rank} A$.

Theor. The rank of a matrix does not change with elementary transformations of its rows and columns.

The theorem on the basis minor.

Def. A minor $M$ of a matrix $A$ is called a basis minor if two conditions are met:

a) it is not equal to zero;

b) its order is equal to the rank of the matrix $A$.

A matrix $A$ may have several basis minors.

The rows and columns of the matrix $A$ in which the selected basis minor is located are called basis rows and columns.

Theor. The theorem on the basis minor. The basis rows (columns) of a matrix $A$ corresponding to any of its basis minors $M$ are linearly independent. Any rows (columns) of the matrix $A$ not included in $M$ are linear combinations of the basis rows (columns).

Theor. For any matrix, its rank is equal to the maximum number of its linearly independent rows (columns).

Calculating the rank of a matrix. Method of elementary transformations.

Using elementary row transformations, any matrix can be reduced to echelon form. The rank of an echelon matrix is equal to the number of its non-zero rows. A basis minor of it is the minor located at the intersection of the non-zero rows with the columns containing the first non-zero element (from the left) of each of these rows.
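A sketch of this method, assuming exact arithmetic with `fractions.Fraction` to avoid rounding; `rank` is an illustrative name. It performs only the forward elimination to echelon form and counts the non-zero rows.

```python
from fractions import Fraction

def rank(A):
    """Rank of A = number of non-zero rows after reduction to echelon form."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), (len(M[0]) if M else 0)
    r = 0  # index of the next pivot (leading) row
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no leading element in this column: go to the next one
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):  # zero the column below the leading element
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r
```

Since transposition does not change the rank, applying `rank` to a matrix and to its transpose should give the same number, which is a convenient self-check.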

SLAE. Basic definitions.

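Def. A system

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1,\\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m \end{cases} \qquad (15.1)$$

is called a system of m linear algebraic equations (SLAE) with n unknowns.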

The numbers $a_{ij}$ are called the coefficients of the SLAE. The numbers $b_i$ are called the free terms of the equations.

Writing an SLAE in the form (15.1) is called the coordinate form.

Def. An SLAE is called homogeneous if $b_1 = b_2 = \dots = b_m = 0$. Otherwise it is called inhomogeneous.

Def. A solution of an SLAE is a set of values of the unknowns which, when substituted, turns each equation of the system into an identity. Any specific solution of an SLAE is also called its particular solution.

Solving SLAE means solving two problems:

Find out whether the SLAE has solutions;

Find all solutions if they exist.

Def. An SLAE is called consistent if it has at least one solution. Otherwise, it is called inconsistent.

Def. A consistent SLAE (15.1) is called definite if its solution is unique, and indefinite if its solution is not unique.

Def. If in system (15.1) $m = n$, the SLAE is called square.

Forms of writing an SLAE.

In addition to the coordinate form (15.1), other forms of writing an SLAE are often used.

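$$x_1A_1 + x_2A_2 + \dots + x_nA_n = B, \qquad (15.2)$$

where $A_j = (a_{1j}, a_{2j}, \dots, a_{mj})^T$ is the column of coefficients of the unknown $x_j$ and $B = (b_1, b_2, \dots, b_m)^T$ is the column of free terms. Relation (15.2) is called the vector form of an SLAE.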

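Using matrix multiplication, SLAE (15.1) can be written as follows:

$$\begin{pmatrix} a_{11} & a_{12} & \dots & a_{1n}\\ a_{21} & a_{22} & \dots & a_{2n}\\ \vdots & \vdots & & \vdots\\ a_{m1} & a_{m2} & \dots & a_{mn} \end{pmatrix} \begin{pmatrix} x_1\\ x_2\\ \vdots\\ x_n \end{pmatrix} = \begin{pmatrix} b_1\\ b_2\\ \vdots\\ b_m \end{pmatrix}, \qquad (15.3)$$

or $AX = B$.

Writing SLAE (15.1) in the form (15.3) is called the matrix form.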

Homogeneous SLAEs.

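A homogeneous system of $m$ linear algebraic equations with $n$ unknowns is a system of the form

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = 0,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = 0, \end{cases}$$

or, in matrix form, $AX = 0$.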

Homogeneous SLAEs are always consistent, since there is always a zero solution.

Criterion for the existence of a non-zero solution. For a nonzero solution to exist for a homogeneous square SLAE, it is necessary and sufficient that its matrix be singular.

Theor. If the columns $X_1, X_2, \dots, X_s$ are solutions of a homogeneous SLAE, then any linear combination of them is also a solution of this system.

Consequence. If a homogeneous SLAE has a non-zero solution, then it has an infinite number of solutions.

It is natural to look for solutions $X_1, X_2, \dots, X_k$ of the system such that any other solution is represented as a linear combination of them, and moreover in a unique way.

Def. Any set of $k = n - r$ linearly independent columns $X_1, X_2, \dots, X_k$ that are solutions of a homogeneous SLAE $AX = 0$, where $n$ is the number of unknowns and $r$ is the rank of its matrix $A$, is called a fundamental system of solutions (FSR) of this homogeneous SLAE.

When studying and solving homogeneous systems of linear equations, we will fix the basis minor in the matrix of the system. The basis minor will correspond to basis columns and, therefore, basis unknowns. We will call the remaining unknowns free.

Theor. On the structure of the general solution of a homogeneous SLAE. If $X_1, X_2, \dots, X_k$ is an arbitrary fundamental system of solutions of a homogeneous SLAE $AX = 0$, then any of its solutions can be represented in the form

$$X = c_1X_1 + c_2X_2 + \dots + c_kX_k,$$

where $c_1, c_2, \dots, c_k$ are some constants.

Thus, the general solution of a homogeneous SLAE has the form

$$X = c_1X_1 + c_2X_2 + \dots + c_kX_k,$$

where $c_1, c_2, \dots, c_k$ are arbitrary constants.

Practical part.

Consider the possible solution sets of the following types of SLAEs and their graphical interpretation.

Consider the possibility of solving these systems using Cramer’s formulas and the matrix method.

Explain the essence of the Gauss method.

Solve the following problems.

Example 1. Solve a homogeneous SLAE and find its FSR.

Let us write down the matrix of the system and reduce it to echelon form.

Since the rank $r$ of the matrix is less than the number of unknowns $n$, the system has infinitely many solutions. The FSR will consist of $n - r$ columns.

Let us discard the zero rows and write the system again:

We will take the basis minor to be in the upper left corner. Thus, the unknowns corresponding to its columns are basic, and the remaining ones are free. Let us express the basic unknowns through the free ones:

Let us set the free unknowns equal to arbitrary constants.

Finally we have:

- the coordinate form of the answer, or

- the matrix form of the answer, or

- the vector form of the answer (the vector columns are the FSR columns).

Algorithm for solving a homogeneous SLAE.

Find the FSR and the general solution of the following systems:

№2.225 (4.39)

Answer:

№2.223 (2.37)

Answer:

№2.227 (2.41)

Answer:

Solve a homogeneous SLAE:

Answer:

Solve a homogeneous SLAE:

Answer:

Presentation of the topic of the next seminar.

Solving systems of linear inhomogeneous equations.

Monitoring the mastery of the material covered.

Test work (3-5 minutes). Four students take part: those with odd numbers in the class register, starting from No. 10.

Follow these steps:

Follow these steps:

Calculate the determinant:

Follow these steps:

Follow these steps:

Find the inverse of this matrix:

Calculate the determinant:

Homework:

1. Solve problems:

№ 2.224, 2.226, 2.228, 2.230, 2.231, 2.232.

2. Work through the lectures on the following topics:

Systems of linear algebraic equations (SLAEs). Coordinate, matrix and vector forms of writing. The Kronecker-Capelli criterion for the consistency of SLAEs. Inhomogeneous SLAEs. A criterion for the existence of a nonzero solution of a homogeneous SLAE. Properties of solutions of a homogeneous SLAE. The fundamental system of solutions of a homogeneous SLAE, the theorem on its existence. The normalized fundamental system of solutions. The theorem on the structure of the general solution of a homogeneous SLAE. The theorem on the structure of the general solution of an inhomogeneous SLAE.

A system of linear equations in which all free terms are equal to zero is called homogeneous:

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = 0,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = 0. \end{cases}$$

Any homogeneous system is consistent, since it always has the zero (trivial) solution. The question arises under what conditions a homogeneous system has a nontrivial solution.

Theorem 5.2. A homogeneous system has a nontrivial solution if and only if the rank of its main matrix is less than the number of its unknowns.

Consequence. A square homogeneous system has a nontrivial solution if and only if the determinant of the main matrix of the system is equal to zero.

Example 5.6. Determine the values of the parameter λ for which the system has nontrivial solutions, and find these solutions:

Solution. This system has a nontrivial solution when the determinant of its main matrix is equal to zero:

Thus, the system has nontrivial solutions when λ = 3 or λ = 2. For λ = 3, the rank of the main matrix of the system is 1. Then, keeping only one equation and setting $y = a$ and $z = b$, we get $x = b - a$, i.e.

$$X = \begin{pmatrix} b - a\\ a\\ b \end{pmatrix}.$$

For λ = 2, the rank of the main matrix of the system is 2. Then, choosing the following minor as the basis minor:

we get a simplified system

From here we find that $x = z/4$, $y = z/2$. Setting $z = 4a$, we get

$$X = \begin{pmatrix} a\\ 2a\\ 4a \end{pmatrix}.$$

The set of all solutions of a homogeneous system has a very important linear property: if the columns $X_1$ and $X_2$ are solutions of a homogeneous system $AX = 0$, then any linear combination $aX_1 + bX_2$ of them is also a solution of this system. Indeed, since $AX_1 = 0$ and $AX_2 = 0$, we have $A(aX_1 + bX_2) = aAX_1 + bAX_2 = a \cdot 0 + b \cdot 0 = 0$. It is because of this property that a linear system with more than one solution has infinitely many solutions.

Linearly independent columns $E_1, E_2, \dots, E_k$ that are solutions of a homogeneous system are called a fundamental system of solutions of the homogeneous system of linear equations if the general solution of this system can be written as a linear combination of these columns:

$$X = c_1E_1 + c_2E_2 + \dots + c_kE_k.$$

If a homogeneous system has $n$ variables and the rank of the main matrix of the system is $r$, then $k = n - r$.

Example 5.7. Find the fundamental system of solutions to the following system of linear equations:

Solution. Let's find the rank of the main matrix of the system:

Thus, the set of solutions of this system of equations forms a linear subspace of dimension $n - r = 5 - 2 = 3$. Let us choose the following minor as the basis minor:

Then, keeping only the basic equations (the others are linear combinations of them) and the basic variables (the remaining, so-called free variables are moved to the right-hand side), we obtain a simplified system of equations:

Setting $x_3 = a$, $x_4 = b$, $x_5 = c$, we find

Setting $a = 1$, $b = c = 0$, we obtain the first basic solution; setting $b = 1$, $a = c = 0$, the second basic solution; setting $c = 1$, $a = b = 0$, the third basic solution. As a result, the normalized fundamental system of solutions takes the form

Using the fundamental system, the general solution of the homogeneous system can be written as

$$X = aE_1 + bE_2 + cE_3.$$

Let us note some properties of solutions to an inhomogeneous system of linear equations AX=B and their relationship with the corresponding homogeneous system of equations AX = 0.

The general solution of an inhomogeneous system is equal to the sum of the general solution of the corresponding homogeneous system $AX = 0$ and an arbitrary particular solution of the inhomogeneous system. Indeed, let $Y_0$ be an arbitrary particular solution of the inhomogeneous system, i.e. $AY_0 = B$, and let $Y$ be the general solution of the inhomogeneous system, i.e. $AY = B$. Subtracting one equality from the other, we get

$$A(Y - Y_0) = 0,$$

i.e. $Y - Y_0$ is the general solution of the corresponding homogeneous system $AX = 0$. Hence, $Y - Y_0 = X$, or $Y = Y_0 + X$. Q.E.D.

Let the inhomogeneous system have the form $AX = B_1 + B_2$. Then the general solution of such a system can be written as $X = X_1 + X_2$, where $AX_1 = B_1$ and $AX_2 = B_2$. This property expresses a universal property of all linear systems (algebraic, differential, functional, etc.). In physics and in electrical and radio engineering it is called the superposition principle. For example, in the theory of linear electrical circuits, the current in any circuit can be obtained as the algebraic sum of the currents caused by each energy source separately.
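A minimal numeric illustration of superposition, assuming a hypothetical non-singular 2×2 system (the values are chosen for illustration only) solved by Cramer's rule; with a non-singular matrix the "general solution" is simply the unique solution.

```python
from fractions import Fraction

def solve2(A, b):
    """Solve a 2x2 system A x = b by Cramer's rule (requires det A != 0)."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("det A = 0")
    return [Fraction(b[0] * a22 - a12 * b[1], det),
            Fraction(a11 * b[1] - a21 * b[0], det)]

# Hypothetical system matrix and two right-hand sides ("sources").
A = [[2, 1], [1, 3]]
B1, B2 = [5, 5], [1, -2]

X1 = solve2(A, B1)                                # response to B1 alone
X2 = solve2(A, B2)                                # response to B2 alone
X = solve2(A, [p + q for p, q in zip(B1, B2)])    # response to B1 + B2
```

Here $X_1 = (2, 1)$, $X_2 = (1, -1)$ and the solution for $B_1 + B_2 = (6, 3)$ is $X = (3, 0) = X_1 + X_2$.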

A homogeneous system of linear equations $AX = 0$ is always consistent. It has nontrivial (nonzero) solutions if and only if $r = \operatorname{rank} A < n$.

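For homogeneous systems, the basic variables (whose coefficients form the basis minor) are expressed through the free variables by relations of the form (assuming, after renumbering, that $x_1, \dots, x_r$ are the basic variables)

$$x_i = c_{i,r+1}x_{r+1} + c_{i,r+2}x_{r+2} + \dots + c_{in}x_n, \quad i = 1, \dots, r.$$

Then the $n - r$ linearly independent vector solutions are

$$E_1 = \begin{pmatrix} c_{1,r+1}\\ \vdots\\ c_{r,r+1}\\ 1\\ 0\\ \vdots\\ 0 \end{pmatrix}, \quad E_2 = \begin{pmatrix} c_{1,r+2}\\ \vdots\\ c_{r,r+2}\\ 0\\ 1\\ \vdots\\ 0 \end{pmatrix}, \quad \dots, \quad E_{n-r} = \begin{pmatrix} c_{1n}\\ \vdots\\ c_{rn}\\ 0\\ 0\\ \vdots\\ 1 \end{pmatrix},$$

and any other solution is a linear combination of them. The vector solutions $E_1, E_2, \dots, E_{n-r}$ form a normalized fundamental system.

In a linear space, the set of solutions of a homogeneous system of linear equations forms a subspace of dimension $n - r$; $E_1, E_2, \dots, E_{n-r}$ is a basis of this subspace.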

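A system of $m$ linear equations with $n$ unknowns (or a linear system) is a system of the form

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1,\\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m. \end{cases} \qquad (1)$$

Here $x_1, x_2, \dots, x_n$ are the unknowns, $a_{11}, a_{12}, \dots, a_{mn}$ are the coefficients of the system, and $b_1, b_2, \dots, b_m$ are the free terms; the indices of $a_{ij}$ give the numbers of the equation ($i$) and of the unknown ($j$).

System (1) is called homogeneous if $b_1 = b_2 = \dots = b_m = 0$, and inhomogeneous otherwise.

System (1) is called square if the number $m$ of equations equals the number $n$ of unknowns.

A solution of system (1) is a set of $n$ numbers $c_1, c_2, \dots, c_n$ such that substituting each $c_i$ for $x_i$ in system (1) turns all its equations into identities.

System (1) is called consistent if it has at least one solution, and inconsistent otherwise.

Solutions $c_1^{(1)}, c_2^{(1)}, \dots, c_n^{(1)}$ and $c_1^{(2)}, c_2^{(2)}, \dots, c_n^{(2)}$ are called distinct if at least one of the equalities

$$c_1^{(1)} = c_1^{(2)}, \quad c_2^{(1)} = c_2^{(2)}, \quad \dots, \quad c_n^{(1)} = c_n^{(2)}$$

is violated.

A consistent system is called definite if it has a unique solution, and indefinite otherwise. If there are more equations than unknowns, the system is called overdetermined.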

Solving systems of linear equations


Methods for solving systems of linear equations are divided into two groups:

1. exact methods, which are finite algorithms for computing the solution of a system (solving with the inverse matrix, Cramer's rule, the Gauss method, etc.),

2. iterative methods, which make it possible to obtain a solution of the system with a given accuracy through convergent iterative processes (the simple iteration method, the Seidel method, etc.).

Due to inevitable rounding, the results of even exact methods are approximate. With iterative methods, the error of the method itself is added to this.

The effective use of iterative methods significantly depends on the successful choice of the initial approximation and the speed of convergence of the process.
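As an illustration of an iterative method, here is a minimal sketch of the Seidel iteration, assuming a system with a strictly diagonally dominant matrix (a standard sufficient condition for convergence). The starting vector `x0` plays the role of the initial approximation and `tol` the required accuracy; these names and their defaults are assumptions of this sketch.

```python
def seidel(A, b, x0=None, tol=1e-10, max_iter=500):
    """Seidel iteration for A x = b.

    Each new component x_i is computed at once from the latest values of the
    other components. Returns the approximate solution and the number of
    iterations used; raises RuntimeError if the process does not converge.
    """
    n = len(A)
    x = list(x0) if x0 is not None else [0.0] * n   # initial approximation
    for it in range(1, max_iter + 1):
        delta = 0.0
        for i in range(n):
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            new = (b[i] - s) / A[i][i]
            delta = max(delta, abs(new - x[i]))     # largest change this sweep
            x[i] = new
        if delta < tol:
            return x, it
    raise RuntimeError("no convergence within max_iter iterations")
```

For the diagonally dominant system $4x + y = 6$, $2x + 5y = 9$, `seidel([[4, 1], [2, 5]], [6, 9])` approaches the exact solution $x = 7/6$, $y = 4/3$ within a few dozen sweeps; a poor starting vector or a matrix without dominance slows convergence or makes the process diverge.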

Solving matrix equations

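Consider a system of $n$ linear algebraic equations in $n$ unknowns $x_1, x_2, \dots, x_n$:

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1,\\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2,\\ \dots\\ a_{n1}x_1 + a_{n2}x_2 + \dots + a_{nn}x_n = b_n, \end{cases} \qquad (13)$$

or, in matrix form,

$$Ax = b, \qquad (14)$$

where

$$A = \begin{pmatrix} a_{11} & \dots & a_{1n}\\ \vdots & & \vdots\\ a_{n1} & \dots & a_{nn} \end{pmatrix}, \quad b = \begin{pmatrix} b_1\\ \vdots\\ b_n \end{pmatrix}, \quad x = \begin{pmatrix} x_1\\ \vdots\\ x_n \end{pmatrix}. \qquad (15)$$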

The matrix $A$, whose columns hold the coefficients of the corresponding unknowns and whose rows hold the coefficients of the unknowns in the corresponding equation, is called the matrix of the system; the column matrix $b$, whose elements are the right-hand sides of the equations of the system, is called the right-hand side matrix or simply the right-hand side of the system. The column matrix $x$, whose elements are the unknowns, is called the solution of the system.

If the matrix $A$ is non-singular, that is, $\det A \ne 0$, then system (13), or the equivalent matrix equation (14), has a unique solution.

Indeed, if $\det A \ne 0$, the inverse matrix $A^{-1}$ exists. Multiplying both sides of equation (14) on the left by $A^{-1}$, we get:

$$x = A^{-1}b. \qquad (16)$$

Formula (16) gives a solution to equation (14) and it is unique.
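Formula (16) can be sketched in Python under the assumption of exact rational arithmetic. The text does not say how $A^{-1}$ is obtained; here it is computed by Gauss-Jordan elimination on the block matrix $[A \mid E]$, one standard way. The names `inverse` and `solve_by_inverse` are illustrative.

```python
from fractions import Fraction

def inverse(A):
    """Inverse of a non-singular square matrix via Gauss-Jordan on [A | E]."""
    n = len(A)
    # Append the identity matrix E to the right of A.
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        pivot = next((r for r in range(c, n) if M[r][c] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular (det A = 0)")
        M[c], M[pivot] = M[pivot], M[c]
        p = M[c][c]
        M[c] = [x / p for x in M[c]]
        for r in range(n):
            if r != c and M[r][c] != 0:
                f = M[r][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return [row[n:] for row in M]   # the right block is now A^{-1}

def solve_by_inverse(A, b):
    """x = A^{-1} b, as in formula (16)."""
    return [sum(a * bi for a, bi in zip(row, b)) for row in inverse(A)]
```

For $A = \begin{pmatrix} 2 & 1\\ 1 & 3 \end{pmatrix}$ this gives $A^{-1} = \frac{1}{5}\begin{pmatrix} 3 & -1\\ -1 & 2 \end{pmatrix}$, and for $b = (5, 5)^T$ the solution $x = (2, 1)^T$. For numerical work, solving the system directly (for example by the Gauss method) is usually preferred to forming the inverse.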

It is convenient to solve systems of linear equations using the function lsolve.

lsolve(A, b)

Returns the solution vector x such that Ax = b.

Arguments:

A is a square, non-singular matrix.

b is a vector with the same number of rows as the matrix A.

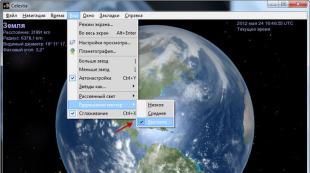

Figure 8 shows the solution to a system of three linear equations in three unknowns.

Gauss method

The Gaussian method, also called the Gaussian elimination method, consists in reducing system (13), by successive elimination of unknowns, to an equivalent system with a triangular matrix:

In matrix notation, this means that first (the forward pass of the Gaussian method) the extended matrix of the system is reduced to echelon form by elementary row operations:

and then (the backward pass of the Gaussian method) this echelon matrix is transformed so that the first $n$ columns form the identity matrix:

$$\left(\begin{array}{cccc|c} 1 & 0 & \cdots & 0 & x_1\\ 0 & 1 & \cdots & 0 & x_2\\ \vdots & & \ddots & & \vdots\\ 0 & 0 & \cdots & 1 & x_n \end{array}\right).$$

The last, $(n+1)$-th column of this matrix contains the solution of system (13).

In Mathcad, the forward and backward passes of the Gaussian method are performed by the function rref(A).

Figure 9 shows the solution of a system of linear equations by the Gaussian method, using the following functions:

rref(A)

Returns the reduced row echelon form of the matrix A.

augment(A, B)

Returns an array formed by placing A and B side by side. The arrays A and B must have the same number of rows.

submatrix(A, ir, jr, ic, jc)

Returns the submatrix consisting of all elements in rows ir through jr and columns ic through jc. Make sure that ir ≤ jr and ic ≤ jc, otherwise the order of the rows and/or columns will be reversed.
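For readers without Mathcad, the same workflow can be sketched in Python. This is an analogue under stated assumptions, not Mathcad's implementation: `augment` and `rref` are hypothetical helpers named after the Mathcad functions, exact fractions are used instead of floating point, and taking the last column of the result plays the role of `submatrix`. The test system is a hypothetical example with a known solution.

```python
from fractions import Fraction

def augment(A, B):
    """Place A and B side by side; both must have the same number of rows."""
    if len(A) != len(B):
        raise ValueError("A and B must have the same number of rows")
    return [list(ra) + list(rb) for ra, rb in zip(A, B)]

def rref(M):
    """Reduced row echelon form (forward and backward Gauss-Jordan passes)."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        p = M[r][c]
        M[r] = [x / p for x in M[r]]        # make the leading element 1
        for i in range(rows):               # zero the rest of column c
            if i != r and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return M

A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]
b = [[8], [-11], [-3]]                      # right-hand side as a column
R = rref(augment(A, b))
x = [row[-1] for row in R]                  # last column = solution
```

For this system the first three columns of `R` form the identity matrix and `x` is $(2, 3, -1)$.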

Figure 9.

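Cramer's rule. Description of the method

For a system of $n$ linear equations with $n$ unknowns (over an arbitrary field)

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1,\\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2,\\ \dots\\ a_{n1}x_1 + a_{n2}x_2 + \dots + a_{nn}x_n = b_n \end{cases}$$

with the determinant Δ of the system matrix different from zero, the solution is written in the form

$$x_i = \frac{\Delta_i}{\Delta}, \quad i = 1, \dots, n,$$

where $\Delta_i$ is the determinant of the matrix obtained from the system matrix by replacing its i-th column with the column of free terms.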
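A sketch of the formulas $x_i = \Delta_i / \Delta$, assuming exact rational arithmetic. Determinants are computed by Gaussian elimination rather than by cofactor expansion; this is a choice of the sketch, not of the text. `det` and `cramer` are illustrative names.

```python
from fractions import Fraction

def det(M):
    """Determinant computed by Gaussian elimination over exact fractions."""
    M = [[Fraction(x) for x in row] for row in M]
    n = len(M)
    sign, result = 1, Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if M[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)            # no pivot: the matrix is singular
        if pivot != c:
            M[c], M[pivot] = M[pivot], M[c]
            sign = -sign                  # a row swap changes the sign
        result *= M[c][c]                 # product of the diagonal elements
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return sign * result

def cramer(A, b):
    """x_i = Delta_i / Delta, where Delta_i has column i replaced by b."""
    delta = det(A)
    if delta == 0:
        raise ValueError("Cramer's rule requires det A != 0")
    n = len(A)
    return [det([list(row[:i]) + [b[k]] + list(row[i + 1:]) for k, row in enumerate(A)]) / delta
            for i in range(n)]
```

For the system $2x_1 + x_2 - x_3 = 8$, $-3x_1 - x_2 + 2x_3 = -11$, $-2x_1 + x_2 + 2x_3 = -3$ one gets $\Delta = -1$ and $x = (2, 3, -1)$. Since each of the $n + 1$ determinants costs $O(n^3)$ operations here, the rule is practical only for small systems.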

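In another form, Cramer's rule is formulated as follows: for any coefficients $c_1, c_2, \dots, c_n$ the following equality holds:

$$(c_1x_1 + c_2x_2 + \dots + c_nx_n)\,\Delta = -\begin{vmatrix} a_{11} & \dots & a_{1n} & b_1\\ \vdots & & \vdots & \vdots\\ a_{n1} & \dots & a_{nn} & b_n\\ c_1 & \dots & c_n & 0 \end{vmatrix}.$$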

In this form, Cramer's formula is valid without the assumption that Δ is nonzero; the coefficients of the system need not even belong to an integral domain (the determinant of the system may even be a zero divisor in the coefficient ring). One may also assume that either the sets $b_1, b_2, \dots, b_n$ and $x_1, x_2, \dots, x_n$, or the set $c_1, c_2, \dots, c_n$, consist not of elements of the coefficient ring of the system but of elements of some module over this ring. In this form, Cramer's formula is used, for example, in the proof of the formula for the Gram determinant and of Nakayama's lemma.

35) Kronecker-Capelli theorem

For a system (1.13) of $m$ inhomogeneous linear equations in $n$ unknowns to be consistent, it is necessary and sufficient that the rank of its matrix $A$ be equal to the rank of its extended matrix $\bar A$ (1.14): $\operatorname{rank} A = \operatorname{rank} \bar A$.

Proof of necessity. Let system (1.13) be consistent, that is, there exist numbers $x_1 = \alpha_1, x_2 = \alpha_2, \dots, x_n = \alpha_n$ such that

$$a_{i1}\alpha_1 + a_{i2}\alpha_2 + \dots + a_{in}\alpha_n = b_i, \quad i = 1, \dots, m. \qquad (1.15)$$

Subtract from the last column of the extended matrix its first column multiplied by $\alpha_1$, its second column multiplied by $\alpha_2$, ..., its n-th column multiplied by $\alpha_n$; that is, subtract from the last column of matrix (1.14) the left-hand sides of equalities (1.15). We obtain the matrix

$$\begin{pmatrix} a_{11} & \dots & a_{1n} & 0\\ \vdots & & \vdots & \vdots\\ a_{m1} & \dots & a_{mn} & 0 \end{pmatrix},$$

whose rank equals $\operatorname{rank} \bar A$, since elementary transformations do not change the rank. But the rank of this matrix is obviously equal to $\operatorname{rank} A$, and therefore $\operatorname{rank} A = \operatorname{rank} \bar A$.

Proof of sufficiency. Let $\operatorname{rank} A = \operatorname{rank} \bar A = r$, and for definiteness let a non-zero minor of order $r$ be located in the upper left corner of the matrix:

$$\begin{vmatrix} a_{11} & \dots & a_{1r}\\ \vdots & & \vdots\\ a_{r1} & \dots & a_{rr} \end{vmatrix} \ne 0.$$

Since this minor is also a basis minor of $\bar A$, the remaining $m - r$ rows of the extended matrix are linear combinations of the first $r$ rows (by the basis minor theorem). But then the first $r$ equations of system (1.13) are independent and the rest are their consequences, that is, any solution of the system of the first $r$ equations automatically satisfies the remaining equations. Two cases are possible.

1. $r = n$. Then the system of the first $r$ equations has as many equations as unknowns and a non-zero determinant; it is consistent and its solution is unique.

2. $r < n$. Then the unknowns $x_{r+1}, \dots, x_n$ can be moved to the right-hand side and given arbitrary values; the resulting system of $r$ equations in $x_1, \dots, x_r$ has a non-zero determinant and therefore a solution, so the original system is consistent and has infinitely many solutions.

36) Definite and indefinite systems

A system of $m$ linear equations with $n$ unknowns (or a linear system) in linear algebra is a system of equations of the form

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1,\\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m. \end{cases} \qquad (1)$$

Here $x_1, x_2, \dots, x_n$ are the unknowns to be determined; $a_{11}, a_{12}, \dots, a_{mn}$ are the coefficients of the system and $b_1, b_2, \dots, b_m$ are the free terms, both assumed to be known. The indices of a coefficient $a_{ij}$ denote the numbers of the equation ($i$) and of the unknown ($j$) at which this coefficient stands.

System (1) is called homogeneous if all its free terms are equal to zero ($b_1 = b_2 = \dots = b_m = 0$), and inhomogeneous otherwise.

System (1) is called consistent if it has at least one solution, and inconsistent if it has no solutions.

A consistent system of the form (1) may have one or more solutions.

Solutions $c_1^{(1)}, c_2^{(1)}, \dots, c_n^{(1)}$ and $c_1^{(2)}, c_2^{(2)}, \dots, c_n^{(2)}$ of a consistent system of the form (1) are called distinct if at least one of the equalities

$$c_1^{(1)} = c_1^{(2)}, \quad c_2^{(1)} = c_2^{(2)}, \quad \dots, \quad c_n^{(1)} = c_n^{(2)}$$

is violated.

A consistent system of the form (1) is called definite if it has a unique solution; if it has at least two distinct solutions, it is called indefinite.

37) Solving systems of linear equations using the Gauss method

Let the original system have the form

$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1,\\ \dots\\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m. \end{cases}$$

The matrix $A = (a_{ij})$ is called the main matrix of the system, and $b$ is the column of free terms.

Then, by the property of elementary row transformations, the main matrix of this system can be reduced to echelon form (the same transformations must be applied to the column of free terms):

Then the variables corresponding to the leading (first non-zero) elements of the non-zero rows are called main variables. All others are called free.

Condition of consistency

The condition that every zero row of the reduced main matrix has a zero free term can be formulated as a necessary and sufficient condition for consistency:

Recall that the rank of a consistent system is the rank of its main matrix (or of its extended matrix, since they are equal).

Algorithm

Description

The algorithm for solving SLAEs using the Gaussian method is divided into two stages.

At the first stage, the so-called forward pass, the system is brought to echelon (triangular) form by elementary row transformations, or it is established that the system is inconsistent. Namely, among the elements of the first column of the matrix, choose a non-zero one and move it to the top position by swapping rows; then subtract the new first row from each remaining row, multiplied by the ratio of that row's first element to the first element of the first row, thereby zeroing the column below it. After these transformations, the first row and first column are mentally crossed out, and the process continues until a matrix of zero size remains. If at some iteration there is no non-zero element in the first column, move on to the next column and perform the same operation.

At the second stage, the so-called backward pass, all basic variables are expressed in terms of the non-basic ones and a fundamental system of solutions is constructed, or, if all variables are basic, the unique solution of the system is found numerically. The procedure starts with the last equation, from which the corresponding basic variable (there is only one) is expressed and substituted into the previous equations, and so on, going up the "steps". Each row corresponds to exactly one basic variable, so at every step except the last (topmost) one the situation exactly repeats the case of the last row.

The Gaussian method requires $O(n^3)$ arithmetic operations.
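A compact sketch of the two stages in Python, assuming a square non-singular system and floating-point arithmetic. Unlike the description above, which takes any non-zero element as the leading element, this version picks the element of largest absolute value in the column (partial pivoting), a common refinement that reduces rounding error. The name `gauss_solve` is illustrative. The three nested loops of the forward pass are what make the cost $O(n^3)$.

```python
def gauss_solve(A, b):
    """Solve a square non-singular system A x = b by Gaussian elimination.

    Forward pass: reduce [A | b] to upper-triangular form.
    Backward pass: find x_n, x_{n-1}, ..., x_1 from the last equation upward.
    """
    n = len(A)
    M = [list(map(float, row)) + [float(bi)] for row, bi in zip(A, b)]
    for c in range(n):
        # Partial pivoting: the row with the largest |element| in column c.
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if M[p][c] == 0.0:
            raise ValueError("matrix is singular")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):            # zero the column below the pivot
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):           # back substitution
        s = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x
```

Applied to $2x_1 + x_2 - x_3 = 8$, $-3x_1 - x_2 + 2x_3 = -11$, $-2x_1 + x_2 + 2x_3 = -3$, the sketch returns values close to $(2, 3, -1)$, up to floating-point rounding, which illustrates the remark above that even exact methods give approximate results in practice.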

This method relies on:

38) Kronecker-Capelli theorem.

A system is consistent if and only if the rank of its main matrix is equal to the rank of its extended matrix.
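The theorem translates directly into a test: compute the rank of the main matrix and of the extended matrix and compare them. This is a sketch with exact fractions; `rank` and `classify` are illustrative names, and the labels "definite" and "indefinite" follow the terminology used earlier in these notes.

```python
from fractions import Fraction

def rank(A):
    """Number of non-zero rows after forward Gaussian elimination."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        p = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, rows):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

def classify(A, b):
    """Kronecker-Capelli test: consistent iff rank A == rank [A | b]."""
    r_main = rank(A)
    r_ext = rank([list(row) + [bi] for row, bi in zip(A, b)])
    if r_main != r_ext:
        return "inconsistent"
    # Consistent: the solution is unique iff the rank equals the number of unknowns.
    return "definite" if r_main == len(A[0]) else "indefinite"
```

For example, $x + y = 1$, $x + y = 2$ is inconsistent (ranks 1 and 2), $x + y = 1$, $x + y = 1$ is indefinite, and $x + y = 2$, $x - y = 0$ is definite.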


Properties of systems of linear homogeneous equations

For the system to have nontrivial solutions, it is necessary and sufficient that the rank of its matrix be less than the number of unknowns.

Theorem. In the case $m = n$, the system has a nontrivial solution if and only if its determinant is equal to zero.

Theorem. Any linear combination of solutions to a system is also a solution to that system.

Definition. A set of solutions of a system of linear homogeneous equations is called a fundamental system of solutions if it consists of linearly independent solutions and any solution of the system is a linear combination of these solutions.

Theorem. If the rank r of the system matrix is less than the number n of unknowns, then there exists a fundamental system of solutions consisting of (n-r) solutions.

Algorithm for solving systems of linear homogeneous equations

- Find the rank of the matrix.

- Select a basis minor. This divides the unknowns into dependent (basic) and free ones.

- Cross out the equations of the system whose coefficients are not included in the basis minor, since they are consequences of the others (by the theorem on the basis minor).

- Move the terms containing free unknowns to the right-hand side. As a result, we obtain a system of r equations in r unknowns, equivalent to the given one, whose determinant is nonzero.

- Solve the resulting system by eliminating unknowns. This gives relations expressing the dependent variables through the free ones.

- If the rank of the matrix is less than the number of variables, find the fundamental system of solutions.

- If rank A = n, the system has only the trivial solution.
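The algorithm above can be sketched in Python, assuming exact rational arithmetic. Reducing the matrix to reduced row echelon form plays the role of selecting the basis minor and eliminating unknowns; setting one free unknown to 1 and the others to 0 produces the normalized fundamental system. `fundamental_system` is an illustrative name, not a library function.

```python
from fractions import Fraction

def fundamental_system(A):
    """Normalized fundamental system of solutions of A x = 0 (n - r vectors)."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, n = len(M), len(M[0])
    pivots = []                      # columns of the basic unknowns
    r = 0
    for c in range(n):
        p = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if p is None:
            continue                 # no pivot: x_c is a free unknown
        M[r], M[p] = M[p], M[r]
        piv = M[r][c]
        M[r] = [x / piv for x in M[r]]
        for i in range(rows):        # eliminate x_c from all other equations
            if i != r and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    free = [c for c in range(n) if c not in pivots]
    fsr = []
    for fc in free:
        x = [Fraction(0)] * n
        x[fc] = Fraction(1)          # this free unknown = 1, the others = 0
        for row_idx, pc in enumerate(pivots):
            x[pc] = -M[row_idx][fc]  # basic unknown expressed via the free ones
        fsr.append(x)
    return fsr                       # empty list: only the trivial solution
```

Applied to the matrix whose rows are the vectors $a_1, \dots, a_5$ of the following example, the sketch returns the single column $(-1, -1, 1, 1)^T$, in agreement with the general solution found there; for a matrix with $\operatorname{rank} A = n$ it returns an empty list.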

Example. Find a basis of the system of vectors $a_1, a_2, \dots, a_5$ and its rank, and express the vectors in terms of the basis, where $a_1 = (0, 0, 1, -1)$, $a_2 = (1, 1, 2, 0)$, $a_3 = (1, 1, 1, 1)$, $a_4 = (3, 2, 1, 4)$, $a_5 = (2, 1, 0, 3)$.

Let us write down the main matrix of the system:

Multiply the 3rd row by (-3) and add the 4th row to the 3rd:

$$\begin{pmatrix} 0 & 0 & 1 & -1\\ 0 & 0 & -1 & 1\\ 0 & -1 & -2 & 1\\ 3 & 2 & 1 & 4\\ 2 & 1 & 0 & 3 \end{pmatrix}$$

Multiply the 4th row by (-2), multiply the 5th row by 3, and add the 5th row to the 4th:

Add the 2nd row to the 1st:

Let us find the rank of the matrix.

The system with the coefficients of this matrix is equivalent to the original system and has the form:

$$\begin{cases} -x_3 = -x_4,\\ -x_2 - 2x_3 = -x_4,\\ 2x_1 + x_2 = -3x_4. \end{cases}$$

Using the method of eliminating unknowns, we find a nontrivial solution.

We obtained relations expressing the dependent variables $x_1, x_2, x_3$ through the free variable $x_4$, that is, we found the general solution:

$$x_3 = x_4, \quad x_2 = -x_4, \quad x_1 = -x_4.$$
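A quick substitution check of this general solution, assuming (as the displayed echelon steps suggest) that the rows of the main matrix are the vectors $a_1, \dots, a_5$. The helper names are illustrative.

```python
# Rows of the main matrix: the vectors a_1, ..., a_5 from the example.
V = [[0, 0, 1, -1],
     [1, 1, 2, 0],
     [1, 1, 1, 1],
     [3, 2, 1, 4],
     [2, 1, 0, 3]]

def general_solution(t):
    """x_1 = -x_4, x_2 = -x_4, x_3 = x_4 with the free unknown x_4 = t."""
    return [-t, -t, t, t]

def residuals(x):
    """Left-hand sides of the homogeneous equations a_i . x = 0."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in V]

# Every value of the free unknown must satisfy all five equations.
for t in (-3, 1, 2, 7):
    assert residuals(general_solution(t)) == [0, 0, 0, 0, 0]
```

Every value of $x_4$ makes all five left-hand sides vanish, so $(-1, -1, 1, 1)^T$ spans the solution set of the homogeneous system with these rows.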
